Driving cyber-physical innovation in the UK: National Cyber-Physical Infrastructure ecosystem programme report, March 2024. Catapult consortium partners: Digital Catapult, Connected Places Catapult...

CReDo is a climate change adaptation digital twin that brings together data from the energy (UK Power Networks), water...

This document forms a Strategic Outline Case for the CReDo project, structured per the Five Case model, a standard...

CReDo Phase 2 final report: Developing decision-support use cases. The Phase 2 final report sets out...

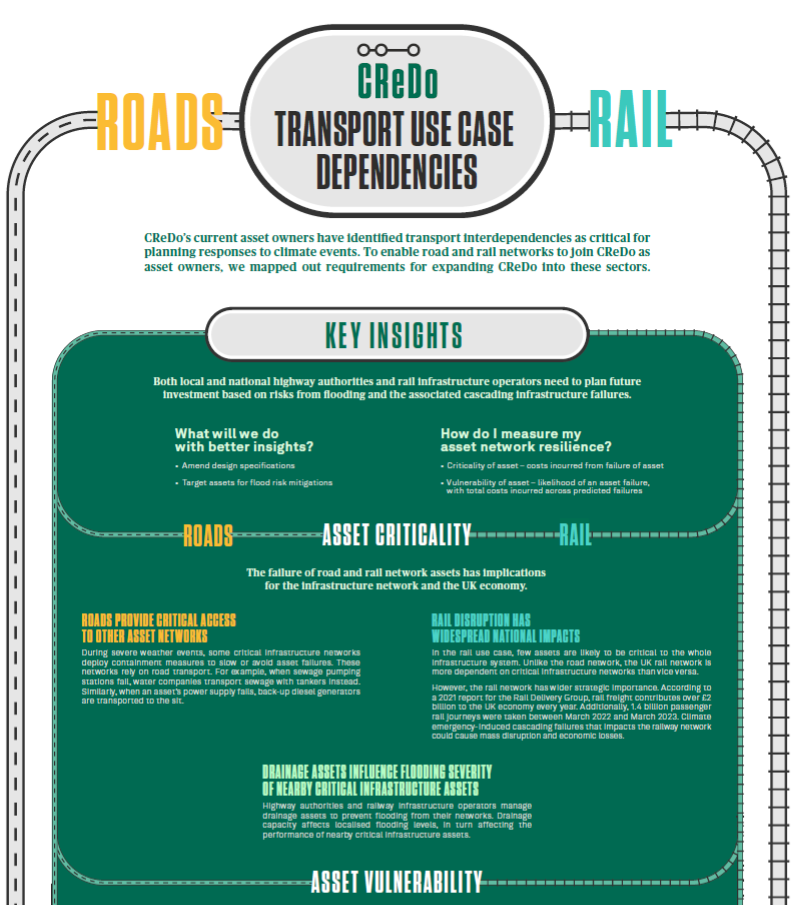

Energy, water, and telecoms are only a subset of the infrastructure networks we need to consider in planning for...

Authored by Tomas Marsh, Systems Engineer at Connected Places Catapult, this report discusses the future development requirements to ready...

This paper has been researched and produced by the Open Data Institute (ODI) in collaboration with its partner, Arup,...

Authored by CReDo’s technical partner CMCL, this report focuses on the implementation made to CReDo during Phase 2 of...

Article by Bentley Systems

From the Department for Science, Innovation and Technology. Executive Summary: From collaborative swarms...

22 June 2023, 09:00-17:30 – Hybrid Event. Venue: Urban Innovation Centre, 1 Sekforde Street, London EC1R 0BE ...

An Information Management Framework for Environmental Digital Twins (IMFe). Executive summary: Environmental science...

Overview brochure. 33 pages. Everything you need to know about the DT Hub and its community...

With the global spend forecast to reach $2.8 trillion in 2025, digital transformation remains at the forefront of organisations'...